The Science Behind Emteq Labs’ OCOsense™ Smart Glasses

How can we measure emotional expression in real time, without a lab or intrusive sensors? Emteq Labs’ OCOsense™ smart glasses offer one answer: glasses equipped with optical sensors that detect subtle facial muscle movements without touching the skin.

Using a patented sensing method called Optomyography (OMG), OCOsense™ captures natural expressions such as smiles and frowns in everyday environments. This lets researchers study emotional responses as they occur naturally, outside the lab and at a scale that lab-based setups make difficult.

The Technology Behind OCOsense™

At the core of OCOsense™ are infrared-based optical sensors that track tiny skin movements caused by underlying muscle activity. Sampling at 50 Hz, they record movement along three axes (X, Y, Z), with no cameras or electrodes required.
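
To make the sampling figures concrete, here is a minimal Python sketch of how one reading from one sensor might be represented. The `OmgSample` class, the sensor label, and the units are hypothetical and not part of any Emteq SDK; only the 50 Hz rate and the three axes come from the text above.

```python
import math
from dataclasses import dataclass

SAMPLE_RATE_HZ = 50  # sampling rate stated for the OCO sensors


@dataclass(frozen=True)
class OmgSample:
    """Hypothetical container for one 3-axis reading from one optical sensor."""
    sensor: str   # illustrative label such as "cheek_left", not an official ID
    index: int    # sample counter since the start of the recording
    x: float      # movement along each axis; units are arbitrary in this sketch
    y: float
    z: float

    @property
    def timestamp_s(self) -> float:
        # At 50 Hz, consecutive samples are 1/50 s = 20 ms apart.
        return self.index / SAMPLE_RATE_HZ

    def magnitude(self) -> float:
        # Euclidean length of the 3D movement vector.
        return math.sqrt(self.x ** 2 + self.y ** 2 + self.z ** 2)


def samples_in(duration_s: float) -> int:
    """Number of samples a single sensor stream produces in `duration_s` seconds."""
    return round(duration_s * SAMPLE_RATE_HZ)


if __name__ == "__main__":
    s = OmgSample("cheek_left", index=150, x=3.0, y=4.0, z=0.0)
    print(s.timestamp_s)   # 3.0
    print(s.magnitude())   # 5.0
    print(samples_in(60))  # 3000
```

At 50 Hz, a one-minute recording yields 3,000 readings per sensor, a useful figure when sizing storage or analysis windows.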

Unlike traditional EMG systems, which rely on adhesive electrodes, OMG sensors are contact-free and work at distances of 4 to 30 mm from the skin [1]. This improves comfort, hygiene, and long-term wearability for participants in natural settings.

With Bluetooth Low Energy (BLE) connectivity and onboard buffering, OCOsense™ is fully wireless [1].
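
The source does not describe how the onboard buffer behaves, so the following is only an illustrative sketch of the general idea: a bounded queue that keeps recent samples while the BLE link is down and hands them over once it reconnects. The `SampleBuffer` class, its capacity, and the drop-oldest policy are all assumptions.

```python
from collections import deque
from typing import Any, Callable


class SampleBuffer:
    """Hypothetical bounded buffer that holds samples while the BLE link is down.

    When full, the oldest samples are discarded. This drop-oldest policy is an
    assumption for illustration, not documented device behaviour.
    """

    def __init__(self, capacity: int) -> None:
        self._queue: deque = deque(maxlen=capacity)
        self.connected = False

    def push(self, sample: Any) -> None:
        # deque(maxlen=...) silently evicts the oldest item when full.
        self._queue.append(sample)

    def flush(self, send: Callable[[Any], None]) -> int:
        """If connected, pass buffered samples to `send` in arrival order."""
        if not self.connected:
            return 0
        sent = 0
        while self._queue:
            send(self._queue.popleft())
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._queue)


if __name__ == "__main__":
    buf = SampleBuffer(capacity=500)   # 10 s of one 50 Hz stream
    for i in range(600):               # 12 s of data arrive while disconnected
        buf.push(i)
    print(len(buf))                    # 500: the first 100 samples were dropped
    received = []
    buf.connected = True
    print(buf.flush(received.append))  # 500
    print(received[0], received[-1])   # 100 599
```

A fixed capacity keeps memory use predictable on a small device; the trade-off is that a long disconnection loses the oldest data first.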

Sensor Placement & Muscle Targeting

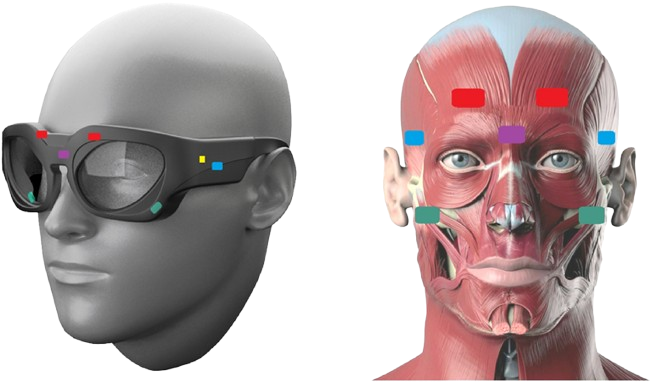

The glasses are embedded with OCO™ sensors, each positioned either over a muscle group involved in facial expression or as a motion reference. They are laid out as follows:

● Forehead (left & right): Over the frontalis muscle, detecting eyebrow raises

● Cheeks (left & right): Over the zygomaticus major, capturing smiles

● Glabella (center brow): Over the corrugator/procerus, helping distinguish frowns from eyebrow raises

● Temples (left & right): Serve as reference points to help eliminate head motion artifacts
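
The layout above can be captured as a small lookup table, for example to select which channels feed an expression model and which serve as references. The dictionary keys and helper functions are hypothetical names chosen for this sketch; the muscle assignments follow the list.

```python
from typing import Dict, List, Optional, Tuple

# Sensor layout from the list above. Keys are hypothetical identifiers, not
# names used by Emteq's software. Each value is (target muscle, purpose);
# None marks a reference sensor that does not target an expression muscle.
SENSOR_LAYOUT: Dict[str, Tuple[Optional[str], str]] = {
    "forehead_left":  ("frontalis", "eyebrow raise"),
    "forehead_right": ("frontalis", "eyebrow raise"),
    "cheek_left":     ("zygomaticus major", "smile"),
    "cheek_right":    ("zygomaticus major", "smile"),
    "glabella":       ("corrugator/procerus", "frown vs. raise"),
    "temple_left":    (None, "head-motion reference"),
    "temple_right":   (None, "head-motion reference"),
}


def expression_sensors() -> List[str]:
    """Sensors positioned over a muscle that drives facial expressions."""
    return [name for name, (muscle, _) in SENSOR_LAYOUT.items() if muscle is not None]


def reference_sensors() -> List[str]:
    """Sensors used only to help cancel head-motion artifacts."""
    return [name for name, (muscle, _) in SENSOR_LAYOUT.items() if muscle is None]


def sensors_over(muscle: str) -> List[str]:
    """All sensor positions that target the given muscle."""
    return [name for name, (m, _) in SENSOR_LAYOUT.items() if m == muscle]


if __name__ == "__main__":
    print(sensors_over("zygomaticus major"))  # ['cheek_left', 'cheek_right']
    print(reference_sensors())                # ['temple_left', 'temple_right']
```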

A 9-axis IMU and an altimeter sit in the right temple, enabling the glasses to distinguish facial expressions from general head movement and to estimate head posture and orientation [1, 4].
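
The text does not spell out how the temple sensors and the IMU are combined, so the following is only a simple stand-in, not the method from [1] or [4]: subtract the average of the two temple signals as a common-mode reference, then mask samples where the gyroscope reports fast head rotation. The 30 °/s threshold and all function names are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

GYRO_THRESHOLD_DPS = 30.0  # hypothetical cut-off for "head is moving", in deg/s


def subtract_reference(signal: Sequence[float],
                       left_ref: Sequence[float],
                       right_ref: Sequence[float]) -> List[float]:
    """Remove the component shared by both temple (reference) sensors."""
    return [s - (l + r) / 2.0 for s, l, r in zip(signal, left_ref, right_ref)]


def head_motion_mask(gyro_xyz: Sequence[Tuple[float, float, float]],
                     threshold: float = GYRO_THRESHOLD_DPS) -> List[bool]:
    """True wherever the angular-rate magnitude exceeds the threshold."""
    return [math.sqrt(gx * gx + gy * gy + gz * gz) > threshold
            for gx, gy, gz in gyro_xyz]


def clean(signal, left_ref, right_ref, gyro_xyz) -> List[Optional[float]]:
    """Reference-corrected signal, with head-motion samples replaced by None."""
    corrected = subtract_reference(signal, left_ref, right_ref)
    moving = head_motion_mask(gyro_xyz)
    return [None if m else v for v, m in zip(corrected, moving)]


if __name__ == "__main__":
    cheek = [5.0, 6.0, 7.0, 8.0]
    temple_l = [1.0, 1.0, 3.0, 1.0]
    temple_r = [1.0, 1.0, 3.0, 1.0]
    gyro = [(0, 0, 0), (10, 0, 0), (40, 0, 0), (0, 0, 5)]
    print(clean(cheek, temple_l, temple_r, gyro))  # [4.0, 5.0, None, 7.0]
```

Masking with `None` rather than deleting samples keeps every value aligned with its original 50 Hz sample index, so downstream windows stay synchronized across sensors.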

Expression Recognition: Backed by Science

Sensor placement was validated experimentally in [3]:

● Cheek sensors picked up cheek raises, smiles and eye-squeezes

● Brow sensors tracked frowns and eyebrow raises

● Temple sensors and the IMU filtered out head motion

● A trained machine learning model classified expressions with 93% accuracy (F1-score: 0.90) [3].

In other words, OCOsense™ does more than track movement: it maps sensor signals onto recognizable facial expressions.
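
Accuracy and F1-score summarize a classifier differently, so the two figures reported in [3] need not match. The sketch below computes accuracy and a macro-averaged F1 (the unweighted mean of per-class F1 scores) on a small, made-up set of labels, not data from [3]. Whether [3] used macro averaging is not stated here, so treat that choice as an assumption.

```python
from typing import List, Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 scores."""
    pairs = list(zip(y_true, y_pred))
    labels = sorted(set(y_true) | set(y_pred))
    scores: List[float] = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        scores.append(2 * precision * recall / denom if denom else 0.0)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Toy labels: one "frown" is misclassified as "neutral".
    y_true = ["smile"] * 6 + ["frown"] * 2 + ["neutral"] * 2
    y_pred = ["smile"] * 6 + ["frown", "neutral"] + ["neutral"] * 2
    print(accuracy(y_true, y_pred))            # 0.9
    print(round(macro_f1(y_true, y_pred), 3))  # 0.822
```

A single error in the small "frown" class barely moves accuracy but pulls that class's F1 down to about 0.67, which lowers the macro average. This is why F1 is often reported alongside accuracy when expression classes are unevenly represented.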

Real-World Applications

Mental Health & Rehab

Track subtle facial changes passively, outside the clinic, in people recovering from stroke or facial palsy, or living with depression [2].

Behavioral Science & Neuroscience

Collect high-quality expression data in everyday environments. Study natural emotional reactions, not just lab-based responses.

Emotion-Aware Tech & XR

Add emotional intelligence to training, virtual reality, and assistive technologies, with no cameras required.

Conclusion

Emteq Labs’ OCOsense™ smart glasses offer a way to measure facial expressions and emotion non-invasively, accurately, and in real-world settings. With validated sensing technology and a practical design, the platform is ready for clinical, research, and technology applications.

Whether you’re studying behavior, building emotion-aware tools, or supporting recovery, OCOsense™ helps you look deeper into what the face reveals.

References

[1] Archer, J. A., Mavridou, I., Stankoski, S., Broulidakis, M. J., Cleal, A., Walas, P., Fatoorechi, M., Gjoreski, H., & Nduka, C. (2023). OCOsense™ smart glasses for analyzing facial expressions using optomyographic sensors. IEEE Pervasive Computing, 22(3), 53–61. https://doi.org/10.1109/MPRV.2023.3276471

[2] Broulidakis, M. J., Kiprijanovska, I., Stankoski, S., Archer, J. A., Cleal, A., Fatoorechi, M., Walas, P., Gjoreski, H., & Nduka, C. (2023). Optomyography-based sensing of facial expression derived arousal and valence in adults with depression. Frontiers in Psychiatry, 14, 1232433. https://doi.org/10.3389/fpsyt.2023.1232433

[3] Kiprijanovska, I., Stankoski, S., Broulidakis, M. J., Archer, J. A., Fatoorechi, M., Gjoreski, M., Nduka, C., & Gjoreski, H. (2023). Towards smart glasses for facial expression recognition using OMG and machine learning. Scientific Reports, 13. https://doi.org/10.1038/s41598-023-43135-5

[4] Stankoski, S., Sazdov, B., Broulidakis, J., Kiprijanovska, I., Sofronievski, B., Cox, S., Gjoreski, M., Archer, J., Nduka, C., & Gjoreski, H. (2023). Recognizing activities of daily living using multi-sensor smart glasses. In 2023 46th International Convention on Information, Communication and Electronic Technology (MIPRO) (pp. 360–365). IEEE. https://doi.org/10.23919/mipro57284.2023.10159701
